This is a story of how a trip to a karaoke bar led to me writing my own app to display presentation slides. Of how a user interface that allows live smartass comments led to me being completely nerd sniped until I was able to do something I’ve never seen in a conference session, however silly the result was.

In the end audience members could send Emoji and other images directly to the slides during the session, which ended up looking like this.

The Innocent Beginning: 360iDev 2018

At 360iDev a few years ago, Jean MacDonald suggested an unofficial group outing: how about a karaoke night? VoiceBox Karaoke wasn’t far from the conference, and they had private karaoke rooms. It’d be just the 360iDev group in their own room.

I found the idea of karaoke to be, well, terrifying. So naturally I was in.

VoiceBox has a fun thing where people in your private room can use their phones to post comments that overlay the lyrics. This led to a lot of fun friendly jokes on song lyrics and people’s singing. Usually a few words, or sometimes an Emoji.

The Nerd Sniping: Summer 2019

For the past few years at 360iDev I’ve hosted a session called Stump 360. It started as something ~~ripped off~~ inspired by the “Stump the Experts” session that used to happen at WWDC. It’s a sort of combination game show and panel discussion. As with the original, obscure technical questions are the excuse for the session, but having fun is more important than actually knowing things.

It’s not quite like a usual conference session. Partly because it’s held after hours, offsite at a nearby bar (even though it’s an official conference event). Partly because audience participation is more than encouraged; it’s pretty much necessary. It does have presentation slides, though, which is important for this post.

My friend Shane Cowherd helps out a lot with the planning. He also comes up with fun and sometimes weird technical ideas. And so one day Shane said, wouldn’t it be awesome if the slides at Stump 360 could do something like the lyrics screen at VoiceBox? People could use their phones to add their own commentary to slides, live, during the session.

I knew I had to make it work. Somehow.

So I wrote a custom presentation slide app…

I wondered how the slides could do this. I realized that every existing presentation app was out, because most presentations don’t include bizarre ideas like this. So I’d have to go for a custom slide app of some kind.

I experimented with a fully custom presentation app, with its own scheme for designing and presenting slides. Like most presentations, my slides tend to stick to a few basic layouts. Slide headings, bullet lists, images, and so on, nothing complex. With a little Auto Layout magic, I could get all of those working. Well, probably. I think.

It might have worked. But I had an absolute deadline. Stump 360 was on August 26th, and this was a spare time project. Eventually I passed on this idea and left the slide design to the experts.

Instead I used Deckset to design my slides, as usual. Deckset couldn’t present them with audience messages on top, so I’d export the slides to PDF and write a custom app that would display them with PDFKit. That I could get working before the session.

Making Slides Interactive

With a custom slide app I could add extra UI to show messages from the audience. But how could I get those messages? And what kind of messages should I plan on?

Since 360iDev is an iOS developer conference, I knew that everyone would have an iOS device handy. Probably a small fleet of them, really. So what could be better than Multipeer Connectivity? It would work on everyone’s device, and the peer-to-peer communication would mean that bad Wi-Fi or mobile network trouble didn’t matter. Awesome!

Except… That means everyone would need to install a client app to interact. I could write that easily enough, but I’d have to deliver it. Maybe via TestFlight? But I didn’t want to have to deal with app review, or start off by trying to convince audience members to download an app. I was working with kind of a weird idea and I needed it to be as easy as possible to participate.

Reluctantly, I decided that I’d be better off with a web page as the audience app. Then I could just put a short URL on my slides, and anyone who wanted to play could dive right in. If network conditions were bad, it might not work very well. But I decided that making it easy to get involved outweighed that risk.

The Client Web App

I used glitch.com for the client web app, since it’s an incredibly convenient way to get something like this running quickly. I wouldn’t have to figure out the technical requirements for the server side (probably not much, but I’m not a web developer). The in-browser editor works well, and their concept of remixing existing projects (a bit like a GitHub fork) makes it easy to adapt existing code.

For messaging I went with the Firebase Realtime Database. I don’t know if it’s the best choice for this, but I knew it, I was under time pressure, and it did the job. The requirements are pretty simple:

- The client app would send short text messages by creating new entries in the database.

- The slide app would monitor the database to find new messages as they came in.

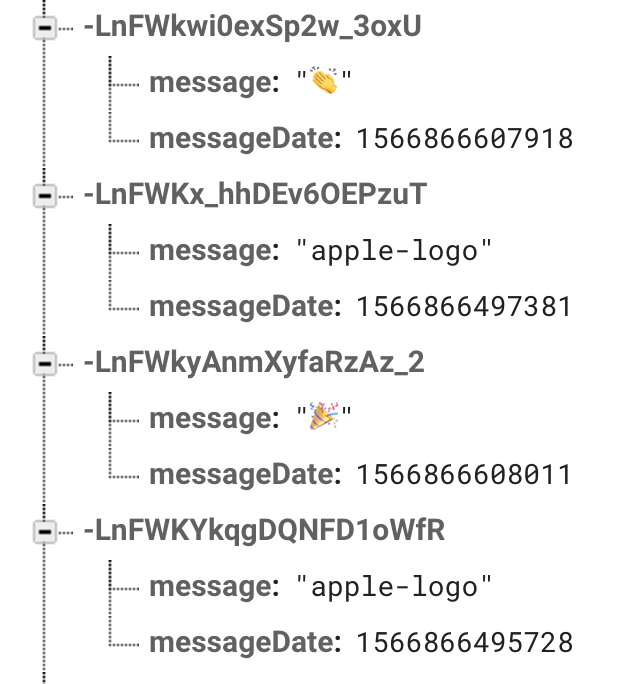

That’s all: a simple one-way, many-to-one messaging system. The Firebase data ended up looking like this:

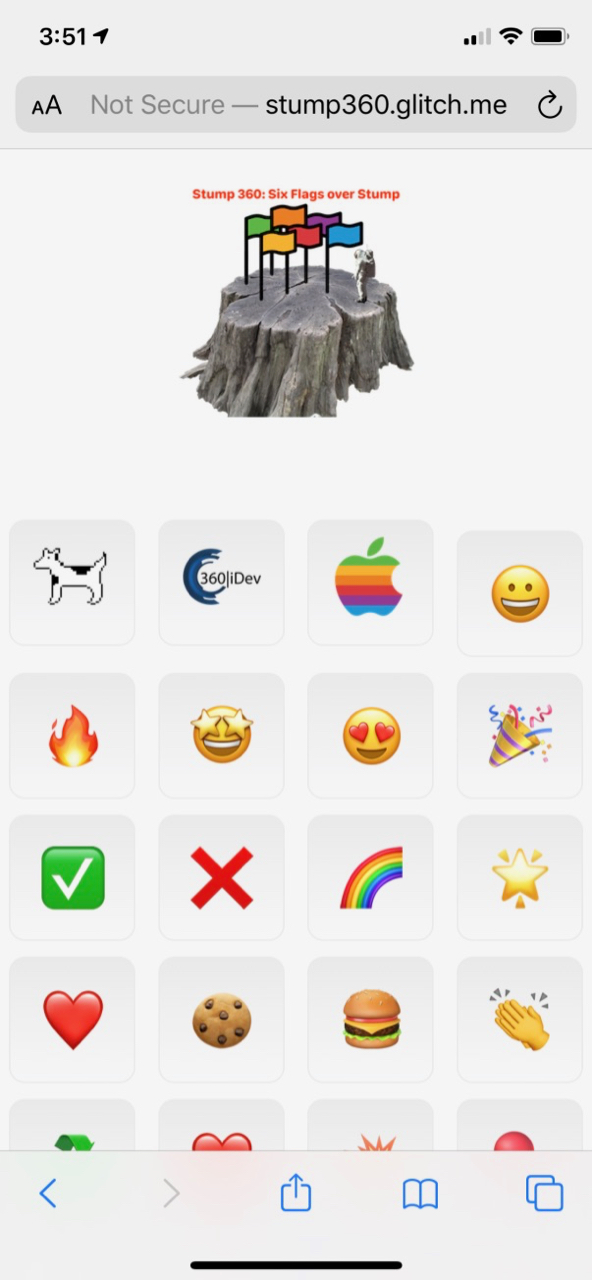
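Judging from the keys the slide app’s listener reads later in this post, each entry carries a `message` string and a `messageDate` timestamp in milliseconds since 1970 (the same unit JavaScript’s `Date.now()` returns). Here is a minimal Swift sketch of that shape, assuming those are the only fields that matter. The `StumpEntry` type and its initializer are hypothetical, not part of the app or of Firebase.

```swift
import Foundation

// Hypothetical model for one entry under /stumps/ in the database.
// Assumes the two fields the slide app reads: "message" and
// "messageDate" (milliseconds since 1970).
struct StumpEntry {
    let message: String
    let messageDate: Date

    // Returns nil if either field is missing or has the wrong type,
    // mirroring the guard statements in the listener.
    init?(dictionary: [String: Any]) {
        guard let message = dictionary["message"] as? String,
              let timestampMs = dictionary["messageDate"] as? Double else {
            return nil
        }
        self.message = message
        // Convert milliseconds to the seconds that Date expects.
        self.messageDate = Date(timeIntervalSince1970: timestampMs / 1000)
    }
}

let sample: [String: Any] = ["message": "🎉", "messageDate": 1_566_864_000_000.0]
if let entry = StumpEntry(dictionary: sample) {
    print(entry.message, entry.messageDate.timeIntervalSince1970)
}
```

Keeping the millisecond-to-second conversion in one place avoids mixing the two units elsewhere in the app.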

To keep things moving, Shane and I decided on a sort of Emoji keyboard for the client UI. Typing messages would distract too much. Instead people could just tap (or repeatedly mash) an Emoji to send a single-character message.

With a bunch of help from Shane, the UI ended up looking like this:

I added a couple of non-Emoji images related to the conference, in the top row in this screenshot.

Showing Emoji over Slides

Listening for incoming messages in the slide app is a pretty straightforward application of listening for snapshot updates from Firebase’s Realtime Database. All it takes is initializing Firebase and listening for new messages at the right database location.

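```swift
import Firebase

// Connect to Firebase (reads GoogleService-Info.plist from the app bundle).
FirebaseApp.configure()

let ref: DatabaseReference = Database.database().reference()
let postListRef: DatabaseReference = ref.child("/stumps/")

// `self` is the object that owns the listener; startDate is when the app launched.
// .childAdded fires once per existing entry, then once per new entry.
postListRef.observe(.childAdded) { (snapshot) in
    guard let stumpEntry = snapshot.value as? [String: Any] else { return }
    // messageDate is stored in milliseconds since 1970.
    guard let messageTimestampMs = stumpEntry["messageDate"] as? Double else { return }
    // Skip anything sent before this launch of the slide app.
    guard messageTimestampMs / 1000 > self.startDate.timeIntervalSince1970 else { return }
    guard let message = stumpEntry["message"] as? String else { return }
    // Hand the Emoji (or image name) to the UI.
    self.stumpmojiReceived?(message)
}
```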

When the app launches, Firebase’s observe starts by replaying all of the entries so far. History doesn’t matter to this app, so I added the timestamp check to filter out anything from before the current app launch. After that it’s just a matter of taking new incoming messages and passing them to the UI.
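The replay-then-filter behavior can be modeled without Firebase at all. The sketch below uses a hypothetical `StumpFeed` class standing in for whatever object owns the observer. `startDate` and `stumpmojiReceived` mirror the names in the snippet above, while `handle(message:timestampMs:)` is invented for illustration and would be called from the `.childAdded` closure.

```swift
import Foundation

// Hypothetical stand-in for the object that owns the Firebase observer.
final class StumpFeed {
    // Captured once, when the slide app launches.
    let startDate: Date
    // The UI sets this to receive each new Emoji or image name.
    var stumpmojiReceived: ((String) -> Void)?

    init(startDate: Date = Date()) {
        self.startDate = startDate
    }

    // Called once per .childAdded snapshot, including the replayed
    // history that arrives right after observing starts.
    func handle(message: String, timestampMs: Double) {
        // Drop anything sent before this launch; only live messages matter.
        guard timestampMs / 1000 > startDate.timeIntervalSince1970 else { return }
        stumpmojiReceived?(message)
    }
}

let feed = StumpFeed(startDate: Date(timeIntervalSince1970: 1_000))
var shown: [String] = []
feed.stumpmojiReceived = { shown.append($0) }
feed.handle(message: "🐢", timestampMs: 500_000)    // sent before launch: filtered out
feed.handle(message: "🚀", timestampMs: 2_000_000)  // sent after launch: delivered
print(shown)
```

Filtering on the client keeps things simple. A possible alternative would be a Firebase query built with `queryOrdered(byChild: "messageDate")` and `queryStarting(atValue:)`, which asks the server to skip the history instead.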

To show the incoming Emoji and images, I added a custom view that overlaid the main PDF view showing the slides. Incoming messages are displayed in a UILabel within this view, initially at a random point on the top edge of the screen. For Emoji I just use the incoming text directly, and for the custom images I look up corresponding images in the app.

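```swift
// Inside the overlay view; `message` is the incoming text.
// Start at a random x position along the top edge, 20 points in from either side.
let messageInitialXPosition = CGFloat.random(in: 20...frame.maxX-20)

let messageView: UIView = {
    let view = UILabel(frame: CGRect(x: messageInitialXPosition, y: 0, width: 100, height: 100))
    // For Emoji, the incoming text is displayed as-is.
    view.text = message
    view.font = UIFont.systemFont(ofSize: 60, weight: .heavy)
    // Shrink the label to fit its text.
    view.sizeToFit()
    view.backgroundColor = .clear
    return view
}()

addSubview(messageView)
```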
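One edge case in the placement code: `20...frame.maxX-20` is only a valid range when the view is at least 40 points wide. If a message ever arrived before the overlay had been laid out (a zero-sized frame), creating that range would trap at runtime. Here is a small helper that clamps the range first. It is hypothetical, not from the app.

```swift
import Foundation

// Hypothetical helper: picks a random x position for a new message,
// keeping `margin` points away from both edges when there is room.
func randomStartX(width: CGFloat, margin: CGFloat = 20) -> CGFloat {
    // Too narrow (or not laid out yet): fall back to the center rather than
    // building an invalid range like 20...-20, which would crash.
    guard width > 2 * margin else { return width / 2 }
    return CGFloat.random(in: margin...(width - margin))
}

print(randomStartX(width: 1024))
print(randomStartX(width: 0))
```

In the overlay view, a call like `randomStartX(width: bounds.width)` could stand in for the direct `CGFloat.random(in:)` call, and the same guard applies to the final x position.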

Non-Emoji buttons used a slightly different approach, using images in the app instead of text.
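Exactly how the app tells an Emoji from one of the custom images isn’t spelled out here. One plausible approach is sketched below, under two assumptions: custom-image messages carry an image name from a known set (`stump360` and `idev` are invented placeholders), and anything else is shown as text only if it is a single Swift `Character`. Because Swift counts grapheme clusters, Emoji with skin-tone modifiers or zero-width-joiner sequences still count as one character.

```swift
// Hypothetical classification of an incoming message.
enum StumpPayload: Equatable {
    case emoji(String)          // any single character, shown as label text
    case image(named: String)   // looked up among the app's images
}

// Placeholder names; the real app's image names aren't given in the post.
let customImageNames: Set<String> = ["stump360", "idev"]

func classify(_ message: String) -> StumpPayload? {
    if customImageNames.contains(message) {
        return .image(named: message)
    }
    // The web client sends single-character messages, so anything longer
    // than one grapheme cluster (or empty) is treated as unexpected.
    return message.count == 1 ? .emoji(message) : nil
}

print(classify("\u{1F44D}\u{1F3FD}") as Any)  // thumbs up with skin tone
print(classify("stump360") as Any)
print(classify("hello") as Any)
```

Ignoring unexpected messages also gives a little protection against someone writing arbitrary text straight into the database.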

The labels then animate downward while slowly spinning and fading out.

```swift
// Pick a random end point at or below the bottom edge.
let messageFinalXPosition = CGFloat.random(in: 20...frame.maxX-20)
let messageFinalYPosition = CGFloat.random(in: frame.maxY...1.25*frame.maxY)
// Each message takes 5 to 15 seconds and gets a random rotation.
let animationTime = TimeInterval.random(in: 5...15)
let animationRotation = CGFloat.random(in: -8*CGFloat.pi...8*CGFloat.pi)

UIView.animate(withDuration: animationTime, animations: {
    messageView.frame.origin.y = messageFinalYPosition
    messageView.frame.origin.x = messageFinalXPosition
    // Fade out while shrinking to half size and rotating.
    messageView.alpha = 0
    messageView.transform = CGAffineTransform(scaleX: 0.5, y: 0.5).concatenating(CGAffineTransform(rotationAngle: animationRotation))
}) { (_) in
    // Clean up once the label has fallen away and faded out.
    messageView.removeFromSuperview()
}
```
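A caveat about the spin, hedged because it comes from how Core Animation generally treats transforms rather than from testing this app: `UIView.animate` interpolates between the start and end transforms, and a rotation by any multiple of 2π gives the same matrix as no rotation. So a random angle of up to 8π effectively becomes its equivalent between -π and π, and each label turns at most half a revolution. The pure-Swift helper below (hypothetical, for illustration) computes that effective angle.

```swift
// Hypothetical helper: the rotation a UIView animation would visibly perform
// for a requested angle, i.e. the angle reduced to the range -π...π.
func effectiveRotation(_ angle: Double) -> Double {
    // IEEE remainder rounds angle / 2π to the nearest integer,
    // so the result lands between -π and π.
    return angle.remainder(dividingBy: 2 * .pi)
}

print(effectiveRotation(8 * .pi))
print(effectiveRotation(1.5 * .pi))
```

If several full spins are wanted, animating the layer’s `transform.rotation.z` key path with a `CABasicAnimation` interpolates the angle itself rather than the matrix, so values beyond 2π turn the label that many times.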

As with pretty much every part of this project, I wasn’t sure if the code was all that great but didn’t have a lot of time to think about it. It worked, so I went with it.

More to Come

After Stump 360 I went back and added some of the things I wanted but didn’t have time for. I plan on doing this again in the future, and I’m not on a tight deadline now. Things like:

- Better PDF handling, including some tricks for slide thumbnails.

- Simple state restoration, so I don’t lose my page.

- A remote control app so I could change slides from my iPhone.
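The details of the state restoration are for a follow-up post. As a minimal sketch of the general idea, assuming the current page index is all that needs saving, it could be stored in `UserDefaults`, keyed by the PDF’s file name so each deck remembers its own page. `SlidePositionStore` and the key format are hypothetical.

```swift
import Foundation

// Hypothetical: remembers the last-shown page for each slide deck.
struct SlidePositionStore {
    let defaults: UserDefaults

    init(defaults: UserDefaults = .standard) {
        self.defaults = defaults
    }

    private func key(for deckName: String) -> String {
        "lastPage.\(deckName)"
    }

    func save(pageIndex: Int, deckName: String) {
        defaults.set(pageIndex, forKey: key(for: deckName))
    }

    // integer(forKey:) returns 0 when nothing was saved,
    // which conveniently means "start at the first slide".
    func restoredPageIndex(deckName: String) -> Int {
        defaults.integer(forKey: key(for: deckName))
    }
}

let store = SlidePositionStore()
store.save(pageIndex: 12, deckName: "Stump360.pdf")
print(store.restoredPageIndex(deckName: "Stump360.pdf"))
```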

I’ll have some follow-up posts to look at these in more detail.